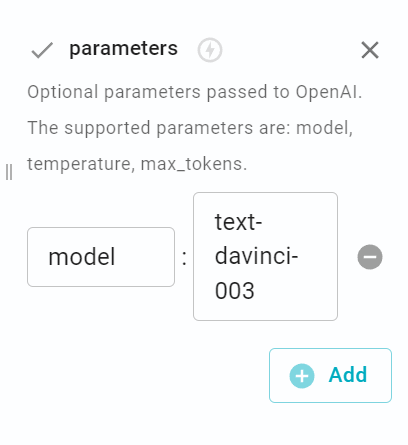

I think the default model is davinci-003?

Hi @bukit, we don't have a direct integration with GPT-3.5 yet. Based on the official OpenAI API reference for GPT-3.5, you can create a Text Blaze snippet that calls it directly:

```
{request_body=tojson(["temperature": 0.3, "max_tokens": 256, "model": "gpt-3.5-turbo", "messages": [["role": "user", "content": "Hello!"]]], "{model: string, messages: { role: string, content: string }[], temperature: number, max_tokens: number}")}

{=request_body}

{urlload: https://api.openai.com/v1/chat/completions; method=POST; headers=Content-Type: application/json, Authorization: Bearer <api key>; body={=request_body}; done=(res) -> catch(["result": res, "isloading": no, "haserror" : no], ["name": res, "isloading": no, "haserror": yes]); start=() -> ["isloading": yes]}

{if: isloading}Loading...{else}{response=fromjson(result)["choices"][1]["message"]["content"]}{=response}{endif}
```

If you find any parameter of this snippet difficult to understand, please let me know. Be sure to replace `<api key>` with your own API key.
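For readers who want to see the same request outside Text Blaze, here is a minimal Python sketch using only the standard library. It assumes the same endpoint, headers, and body fields as the snippet above; the function names (`build_chat_request`, `extract_reply`, `ask`) are hypothetical, and only `ask` actually touches the network.

```python
import json
import urllib.request

# Same endpoint the Text Blaze snippet above posts to.
CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, prompt, model="gpt-3.5-turbo",
                       temperature=0.3, max_tokens=256):
    """Build (but do not send) a POST request to the chat completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),  # serializer handles escaping
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_text):
    """Return the assistant's text from a chat completions JSON response.

    Python lists start at 0, so choices[0] here plays the role of
    ["choices"][1] in the Text Blaze snippet, whose lists start at 1.
    """
    data = json.loads(response_text)
    return data["choices"][0]["message"]["content"]


def ask(api_key, prompt, **kwargs):
    """Send the request and return the reply (needs network access and a valid key)."""
    with urllib.request.urlopen(build_chat_request(api_key, prompt, **kwargs)) as resp:
        return extract_reply(resp.read().decode("utf-8"))
```

With a real key, `ask("<api key>", "Hello!")` should return the reply text; passing `model="gpt-4"` changes only the `model` field of the request body.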

If we were to integrate GPT-3.5 officially, what are the features that you would be looking for? Or is this small snippet sufficient for your use cases?

I want to be able to choose the optional parameters passed to OpenAI and use the GPT-3.5 Turbo model to process OpenAI snippets, because Davinci 003 costs too much:

- GPT-3.5-turbo: $0.002 / 1K tokens
- DaVinci 003: $0.02 / 1K tokens
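At the prices quoted here, the same traffic costs ten times as much on Davinci 003. A small Python sketch of the arithmetic, where the prices are the ones quoted in this post (they may have changed since) and the 500,000-token monthly volume is a made-up example:

```python
# Per-1K-token prices as quoted in this thread.
PRICE_PER_1K = {
    "gpt-3.5-turbo": 0.002,
    "davinci-003": 0.02,
}


def cost_usd(model, tokens):
    """Cost in US dollars for `tokens` tokens at the quoted per-1K price."""
    return PRICE_PER_1K[model] * tokens / 1000


usage = 500_000  # hypothetical monthly token count
for model in PRICE_PER_1K:
    print(f"{model}: ${cost_usd(model, usage):.2f}")
```

This prints `$1.00` for gpt-3.5-turbo and `$10.00` for davinci-003.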

Thank you for the example snippets, I'm going to try it.

There's a new update at OpenAI. Will the OpenAI command pack get an upgrade, for example the ability to choose which model to use?

Hi @bukit, which update are you interested in? Please provide a relevant link or example.

The chat models are not available via our OpenAI command pack yet. You can use the small Text Blaze snippet that I shared with you earlier to access them.

In that snippet, change "model": "gpt-3.5-turbo" to "model": "gpt-4" to get this update.

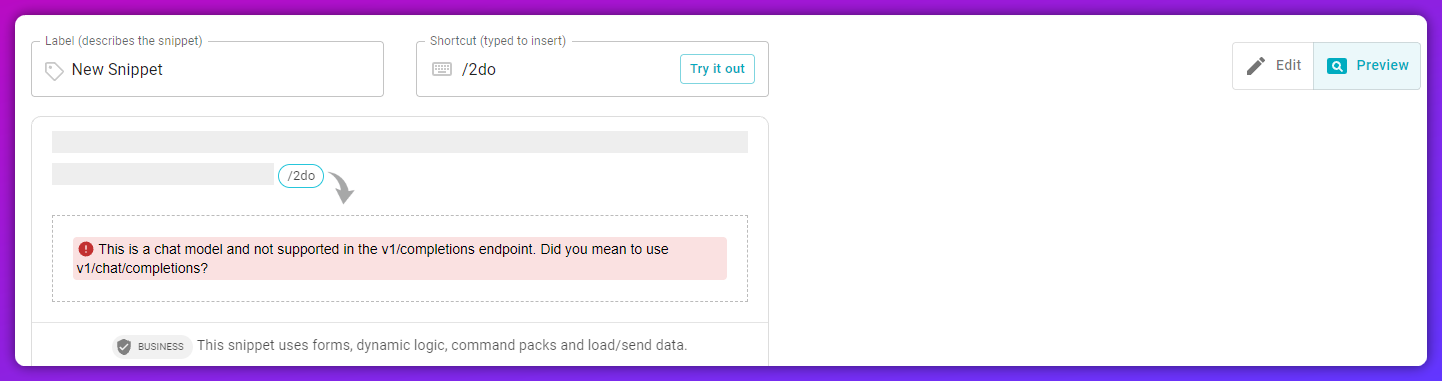

Is it not possible to choose "gpt-3.5-turbo" as a parameter?

Hey @Daniel_b, gpt-3.5-turbo and davinci-003 are different engines (chat completion vs. text completion), so it's not enough to just swap out the parameters. You will need to use the snippet that I shared above to use gpt-3.5-turbo. I hope it helps!
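To make the difference concrete: per OpenAI's API reference, a text completion request (`POST /v1/completions`) sends a plain `prompt` string and returns the output in `choices[0].text`, while a chat completion request (`POST /v1/chat/completions`) sends a list of role-tagged `messages` and returns `choices[0].message.content`. A Python sketch of both shapes; the helper names are hypothetical and the model is left to the caller:

```python
def text_completion_body(prompt, model, max_tokens=256):
    """Body for POST /v1/completions: the model continues a plain prompt string."""
    return {"model": model, "prompt": prompt, "max_tokens": max_tokens}


def chat_completion_body(prompt, model, max_tokens=256):
    """Body for POST /v1/chat/completions: the prompt becomes a role-tagged message list."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def text_completion_reply(response):
    """Completions responses carry the output directly in choices[0]["text"]."""
    return response["choices"][0]["text"]


def chat_completion_reply(response):
    """Chat responses nest it under choices[0]["message"]["content"]."""
    return response["choices"][0]["message"]["content"]
```

Because both the request fields and the response path differ, changing only the `model` value in a text completion request is not enough to reach a chat model.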

I'm unable to use this.

Hey @Felix , what is the issue you are facing in using this? Please feel free to email me at gaurang@blaze.today and I'll be happy to help.

How do I add {{clipboard}} to the GPT-4 request snippet? It's not working for me.
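The thread doesn't say what went wrong here. One common pitfall when inserting arbitrary text, such as clipboard contents, into a request body is building the JSON by pasting the text into a template, which breaks as soon as the text contains quotes or line breaks; serializing the whole body (as the snippet's `tojson` call does) avoids that. A Python illustration, where `clipboard_text` is a hypothetical stand-in for the clipboard and the helper names are made up:

```python
import json


def naive_body(text):
    """Fragile: pastes the text straight into a JSON template."""
    return '{"role": "user", "content": "' + text + '"}'


def safe_body(text):
    """Robust: lets the JSON serializer escape quotes and newlines."""
    return json.dumps({"role": "user", "content": text})


def is_valid_json(s):
    """True if `s` parses as JSON."""
    try:
        json.loads(s)
        return True
    except json.JSONDecodeError:
        return False


clipboard_text = 'He said "hi"\nand left.'  # hypothetical clipboard contents
print(is_valid_json(naive_body(clipboard_text)))  # False
print(is_valid_json(safe_body(clipboard_text)))   # True
```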

I'll respond to your email, Mark.

Hi Gaurang,

It looks like OpenAI is deprecating the davinci-003 model and has just released gpt-3.5-turbo-instruct.

I just tried it and it looks like it's working! Maybe you should let the community know.

I just tried it, and it does work out of the box.

We will switch to this new model soon, thanks for informing us!

We've updated the Text Blaze OpenAI command pack to now use the gpt-3.5-turbo-instruct model.

Hi

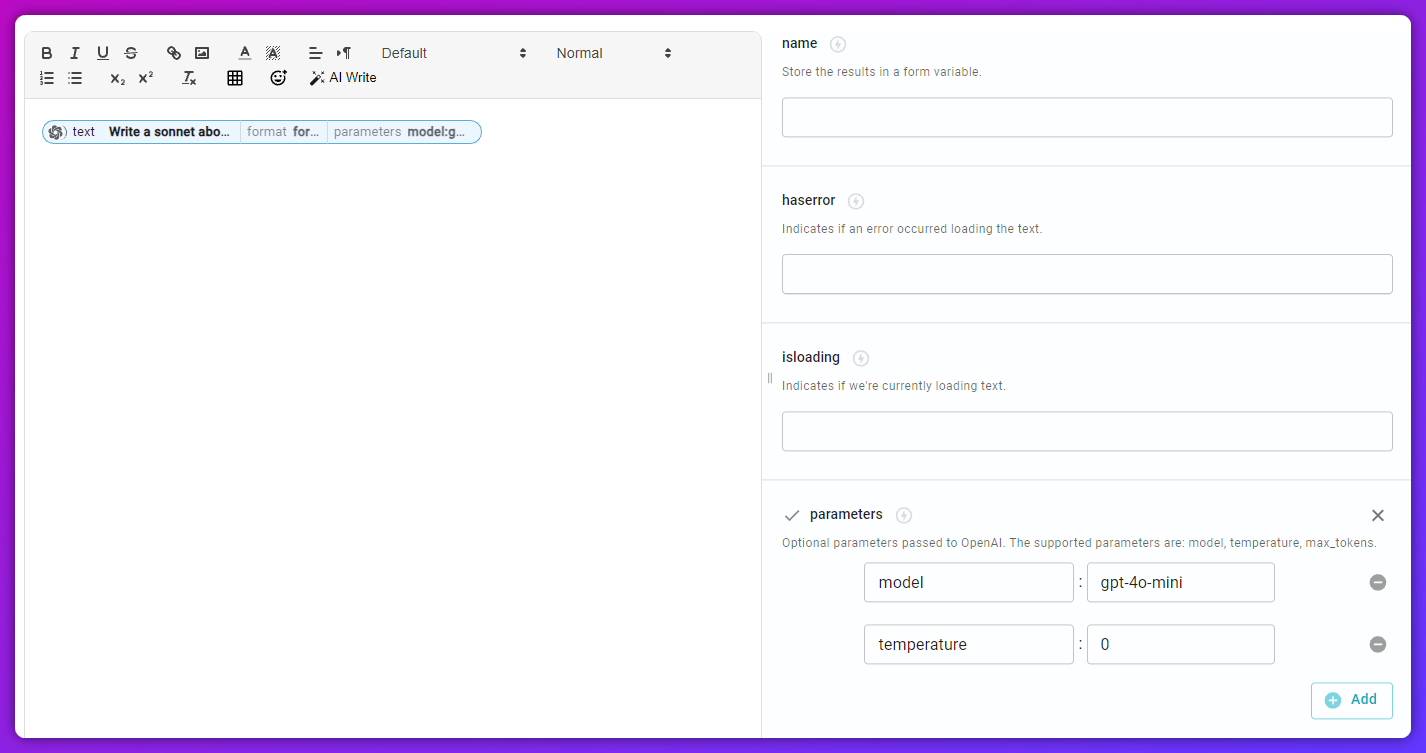

Please update to the newer OpenAI endpoint so we can use the gpt-4o-mini model and its vision capabilities.

https://platform.openai.com/docs/api-reference/completions

https://platform.openai.com/docs/guides/chat-completions

Thank you.
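For reference, vision input on the chat completions endpoint is sent as a list of typed content parts instead of a plain string. A Python sketch of such a request body, assuming gpt-4o-mini as the model; the helper names are hypothetical, and the image can be given either as a public URL or as a base64 data URL:

```python
import base64


def image_data_url(image_bytes, mime="image/png"):
    """Encode raw image bytes as a data URL usable in an image_url part."""
    return f"data:{mime};base64,{base64.b64encode(image_bytes).decode('ascii')}"


def vision_chat_body(question, image_url, model="gpt-4o-mini"):
    """Chat completions body mixing a text part and an image part in one message."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }
```

The body is posted to the same `/v1/chat/completions` endpoint as a text-only chat request, and the reply is read from `choices[0].message.content` as before.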

With this, can we ask OpenAI to transform text that we have in the clipboard and get it formatted as a table?

@blz AI Blaze can do that: